1. Introduction

Here we demonstrate the effects that slow performance of backend servers and databases can have on an API Gateway under load. Using the java-TestProxy tool we can add delays to sockets and simulate problems that may otherwise not be reproducible outside of the production environment.

This article builds on some previous articles:

TechTip - using java-TestProxy tool to add delays and test API Gateway under stress and failure conditions.

Enable JMX monitoring access to the API Gateway JVM

Analyzing API Gateway trace logs for performance issues (another tool to use)

We provide video demonstrations of load testing the API Gateway as we degrade the performance of the backend server and the database server, in a few common configurations where problems can occur.


2. Running under Optimal conditions

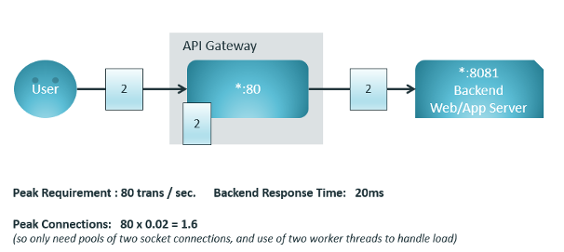

Our basic system uses the API Gateway as a simple proxy. Our target load is 80 TPS, and under normal working conditions the response time is less than 20ms.

Our peak requirement for concurrent transactions (i.e. the number of sockets we need open and the number of active worker threads) is less than 2: at 80 trans/sec and a 20ms response time, 80 × 0.020s = 1.6 transactions are in flight, so two threads are enough to handle all the traffic.
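The "less than 2" figure is an instance of Little's law: transactions in flight = arrival rate × response time. A minimal Java sketch of that arithmetic follows; the class and method names are hypothetical and are not part of API Gateway or java-TestProxy. Note that Little's law gives the long-run average, so bursty traffic can briefly push the real peak a little higher.

```java
// Little's law: average transactions in flight = arrival rate x time each one takes.
final class ConcurrencyEstimate {

    /** Average number of transactions in flight (busy worker threads / open sockets). */
    static double inFlight(double tps, double responseTimeSeconds) {
        return tps * responseTimeSeconds;
    }

    /** Round the average up to a whole number of threads/sockets. */
    static int threadsNeeded(double tps, double responseTimeSeconds) {
        return (int) Math.ceil(inFlight(tps, responseTimeSeconds));
    }

    public static void main(String[] args) {
        double tps = 80;              // target load from the text
        double responseTime = 0.020;  // 20ms under normal conditions
        System.out.println("average in flight: " + inFlight(tps, responseTime));
        System.out.println("threads needed:    " + threadsNeeded(tps, responseTime));
    }
}
```

The same method with a 30sec response time, `threadsNeeded(80, 30)`, gives the 2,400 open requests discussed in the next section.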

3. Running with backend delays

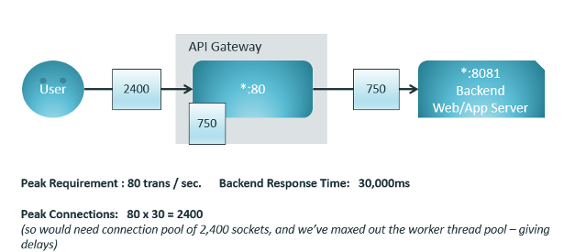

The most common cause of failure in production is a backend server that starts to respond more slowly than expected. For example, if the backend server goes from a 20ms response time to a 30sec response time, then we need the following:

The relationship is: num-parallel = throughput × transaction-time (equivalently, max throughput = num-parallel / transaction-time). With a 20ms transaction time we did not need to keep many transactions running in parallel (2 was enough). Now that the transaction time is 30sec, we need 80 × 30 = 2,400 open requests. To maintain the same throughput as the transaction time increases, we need to increase how many transactions we can run at the same time.

However, the default io.httpMaxConcurrency=750 creates a "bottleneck": the API Gateway will only process the first 750 requests, which are sent on to the backend where they take 30sec each, and new requests are only processed as earlier ones finish. A new request arriving at the gateway will wait (2,400 / 750) × 30sec = 96sec and then take a further 30sec to process.

The max capacity of the API Gateway with MaxConcurrency=750 and a (degraded) 30sec response time is 750 / 30 = 25 requests/sec, i.e. 25 TPS.
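The capacity arithmetic in this section can be checked with a few lines of Java. This is a sketch: the class and method names are hypothetical, the inputs (80 TPS, 30sec, io.httpMaxConcurrency=750) are the ones used in the text, and `queueWaitSeconds` simply encodes the text's (2,400 / 750) × 30sec estimate rather than any formula taken from the product.

```java
// Capacity ceiling imposed by a fixed concurrency limit, using the numbers from the text.
final class ConcurrencyCeiling {

    /** Max sustainable TPS when at most maxConcurrency requests can be in progress. */
    static double maxThroughput(int maxConcurrency, double transactionSeconds) {
        return maxConcurrency / transactionSeconds;
    }

    /** Open requests needed to sustain the offered load (Little's law). */
    static double requiredConcurrency(double tps, double transactionSeconds) {
        return tps * transactionSeconds;
    }

    /** The text's estimate of queue wait: (open requests / limit) x transaction time. */
    static double queueWaitSeconds(double openRequests, int maxConcurrency, double transactionSeconds) {
        return (openRequests / maxConcurrency) * transactionSeconds;
    }

    public static void main(String[] args) {
        int limit = 750;   // io.httpMaxConcurrency default quoted in the text
        double slow = 30;  // degraded backend response time, seconds
        double open = requiredConcurrency(80, slow);
        System.out.printf("required open requests: %.0f%n", open);
        System.out.printf("max throughput: %.1f TPS%n", maxThroughput(limit, slow));
        System.out.printf("queue wait: %.1f s, then %.0f s to process%n",
                queueWaitSeconds(open, limit, slow), slow);
    }
}
```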

Since in a PROD environment (or in a proper load test) requests will keep arriving at the API Gateway at 80 TPS, the API Gateway will start refusing to take new requests, and the transactions that are processed will see delays even longer than 30sec.
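To see why the delays keep growing, here is a small deterministic model in Java: requests arrive evenly at 80 TPS, at most 750 run at once (standing in for io.httpMaxConcurrency), each spends exactly 30sec at the backend, and any excess waits in an unbounded FIFO queue. That last point is a simplifying assumption, since the real gateway starts refusing requests instead, so treat the exact figures as illustrative; the class and method names are hypothetical.

```java
// Deterministic FIFO model: fixed arrival rate, at most `slots` requests in progress,
// identical backend time for every request. Assumes an unbounded queue (no rejections).
final class SlotQueueModel {

    /** Returns the queue wait (seconds) of each of the first n requests. */
    static double[] waits(int n, double tps, int slots, double serviceSeconds) {
        double[] start = new double[n];
        double[] wait = new double[n];
        for (int i = 0; i < n; i++) {
            double arrival = i / tps;
            // With identical service times and FIFO order, request i takes the slot
            // freed by request i - slots, which finishes at start[i - slots] + serviceSeconds.
            double slotFree = (i < slots) ? 0.0 : start[i - slots] + serviceSeconds;
            start[i] = Math.max(arrival, slotFree);
            wait[i] = start[i] - arrival;
        }
        return wait;
    }

    public static void main(String[] args) {
        double[] w = waits(10_000, 80, 750, 30);
        for (int i : new int[] {0, 749, 750, 2400, 4800, 9999}) {
            System.out.printf("request %5d waits %6.1f s before reaching the backend%n", i, w[i]);
        }
    }
}
```

In this model each batch of 750 requests arrives in 9.375sec but takes 30sec to clear, so the wait grows by about 20.6sec for every further 750 requests and never levels off, which is why the gateway eventually has to refuse work.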

4. Test Setup

We've used the java-TestProxy to vary the delay of the responses from the backend, as described here:

TechTip - using java-TestProxy tool to add delays and test API Gateway under stress and failure conditions

Enable JMX monitoring access to the API Gateway JVM

So we are simulating bad performance from the backend server.


We then vary the backend delay from 0sec to 5sec and up to 60sec, to simulate stress and eventually failure of the test system.
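Before running the sweep, the arithmetic from section 3 gives a rough idea of where errors should start to appear with the default concurrency limit and the 80 TPS target. This Java sketch is a back-of-envelope estimate, not a measured result: it assumes the concurrency limit is the only bottleneck and that the normal 20ms is negligible next to the added delay (so the 0sec case is left out). The class and method names are hypothetical.

```java
// Sweep the backend delay and compare the theoretical ceiling with the offered load.
final class DelaySweep {

    /** TPS ceiling for a given concurrency limit and per-request time. */
    static double ceilingTps(int maxConcurrency, double delaySeconds) {
        return maxConcurrency / delaySeconds;
    }

    /** Largest per-request time at which the limit can still carry the offered load. */
    static double breakEvenDelaySeconds(int maxConcurrency, double offeredTps) {
        return maxConcurrency / offeredTps;
    }

    public static void main(String[] args) {
        int limit = 750;      // io.httpMaxConcurrency default quoted in section 3
        double offered = 80;  // target load from section 2
        for (double delay : new double[] {5, 10, 30, 60}) {
            double ceiling = ceilingTps(limit, delay);
            System.out.printf("delay %4.0f s -> ceiling %6.1f TPS (%s)%n",
                    delay, ceiling, ceiling >= offered ? "keeps up" : "falls behind");
        }
        System.out.printf("break-even delay: %.3f s%n", breakEvenDelaySeconds(limit, offered));
    }
}
```

With a limit of 750 and 80 TPS offered, the break-even is 750 / 80 = 9.375sec, so in this simple model a delay of 10sec or more already pushes the gateway past its ceiling.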

5. Example 1 - Testing Simple Delay on one service.

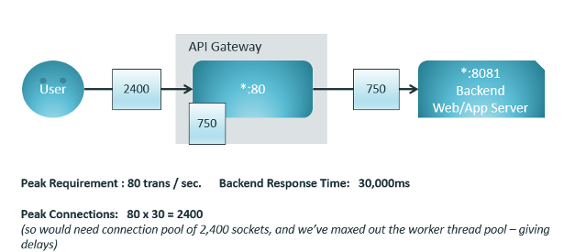

Here is the basic test case, where we run a single backend with increasing delays until the API Gateway starts producing errors.

The setup is like this:

And the video of running the test case is here:

6. Example 2 - Testing Delay on one service can affect other services.

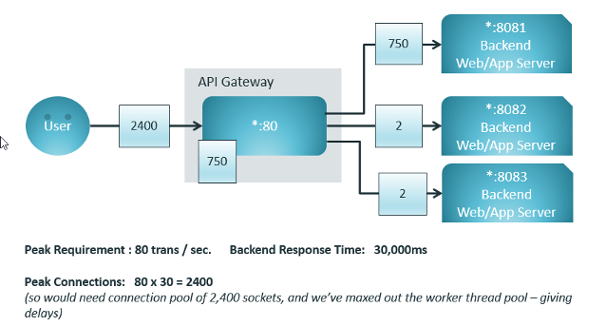

When multiple services are offered via the API Gateway and one of them performs badly, it also affects the availability of the other services. For our test setup we have three backend services, and we add delays to one of them.
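The knock-on effect can be reproduced in miniature with a `java.util.concurrent` fixed thread pool standing in for worker threads shared by all services. This is a hypothetical model, not a description of how API Gateway is implemented; the pool sizes, delays and class name are illustrative assumptions. Once the slow service occupies every thread, a call to a service with no delay at all still has to queue.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

// Hypothetical model: one fixed-size worker pool shared by every service behind the gateway.
final class SharedPoolStarvation {

    /** Occupies the pool with slow calls, then times one call to a fast (no-delay) service. */
    static long fastCallLatencyMillis(int poolSize, int slowRequests, long slowDelayMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            for (int i = 0; i < slowRequests; i++) {
                // Slow service: each call holds a worker thread for slowDelayMillis.
                pool.submit(() -> {
                    Thread.sleep(slowDelayMillis);
                    return null;
                });
            }
            long start = System.nanoTime();
            // Fast service: does no work, but still needs a free worker thread.
            Future<?> fast = pool.submit(() -> { });
            fast.get();
            return TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow(); // interrupt any slow calls still sleeping
        }
    }

    public static void main(String[] args) {
        // Every thread busy with the slow service: the fast call waits behind it.
        System.out.println("pool of 2, 2 slow calls: " + fastCallLatencyMillis(2, 2, 500) + " ms");
        // One spare thread: the fast call completes almost immediately.
        System.out.println("pool of 3, 2 slow calls: " + fastCallLatencyMillis(3, 2, 500) + " ms");
    }
}
```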

And here is the video of running this test case:

7. Example 3 - A Looped Request can double your delay effect

Another common setup is for some API Gateway services to make a local callback, i.e. a request that loops back through the API Gateway. This can have a multiplying effect on thread/socket usage, and it also means the request has to run the gauntlet of waiting for a free thread/socket twice in one transaction, causing additional delays. Our test setup has two backend services: one affected by the delay, and one service that makes a callback to the API Gateway before passing the request on to the backend.
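A rough way to quantify the multiplying effect is to count how many times each transaction passes through the gateway. The sketch below assumes, as a simplification, that the looped service's final backend is the delayed one, so both the outer request and the callback hold a gateway thread for the whole backend delay. The names are hypothetical; the inputs are the 80 TPS, 30sec and 750 figures from section 3.

```java
// Hypothetical back-of-envelope model of a looped (callback) request.
final class LoopedRequestModel {

    /** Gateway threads/sockets held at once if each transaction passes through `passes` times. */
    static double threadsHeld(double tps, double backendSeconds, int passes) {
        // Each pass stays open until the delayed backend replies, so it holds a thread that long.
        return tps * backendSeconds * passes;
    }

    /** TPS ceiling when each transaction consumes `passes` slots for backendSeconds. */
    static double ceilingTps(int maxConcurrency, double backendSeconds, int passes) {
        return maxConcurrency / (backendSeconds * passes);
    }

    public static void main(String[] args) {
        int limit = 750; // io.httpMaxConcurrency default quoted in section 3
        for (int passes = 1; passes <= 2; passes++) {
            System.out.printf("passes=%d: threads held %.0f (limit %d), ceiling %.1f TPS%n",
                    passes, threadsHeld(80, 30, passes), limit, ceilingTps(limit, 30, passes));
        }
    }
}
```

Under these assumptions the loop doubles the open requests needed (2,400 to 4,800) and halves the ceiling (25 to 12.5 TPS), before counting the extra queueing on the second pass.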

And here is the video of running that test case:

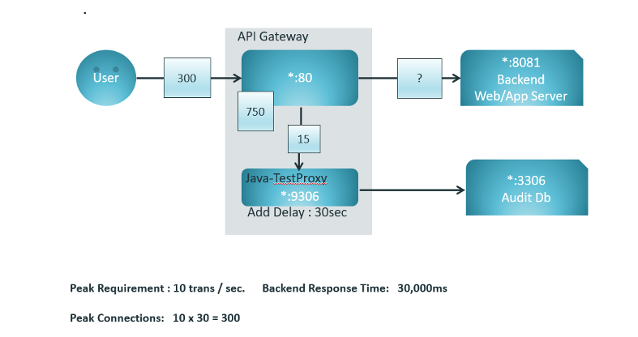

8. Example 4 - Adding delay to Audit DB connection

Here we test conditions where a remote "Audit" database is responding badly. We have set up a remote audit database, and JDBC access to it goes through our java-TestProxy, so we can add delays to any audit records that are written to the database. Here is our test setup:

And here is the video of running that test case:

Note: In this setup, we are running the java-TestProxy on the API Gateway itself, whereas in the other examples we were running the proxy on the backend server.

9. Summary

I have been involved in a number of cases where production systems did not perform as expected under load or under some unusual condition: say network usage spiked and caused delays because someone copied several gigabytes of data, or someone ran a backup or a scan of a database. Sometimes these cases have dragged on because the conditions were not reproducible in a non-production environment. That made it hard to prove that the cause had been identified and that the suggested configuration changes would resolve the issue. Usually it has taken several iterations before the problem was resolved, and there is always the possibility that max capacity has been exceeded and more hardware is needed. Hopefully what is included here helps in understanding the issue of exceeding capacity under poor performance conditions, and also shows how to reproduce or simulate those bad performance conditions in a test environment.

Cheers - Mark

PS: Apologies in advance for the quality of the discussion in the videos; I figured quick editing was better than not getting it done :-)